The Current State

Two Critical Issues

1. The Specificity Problem

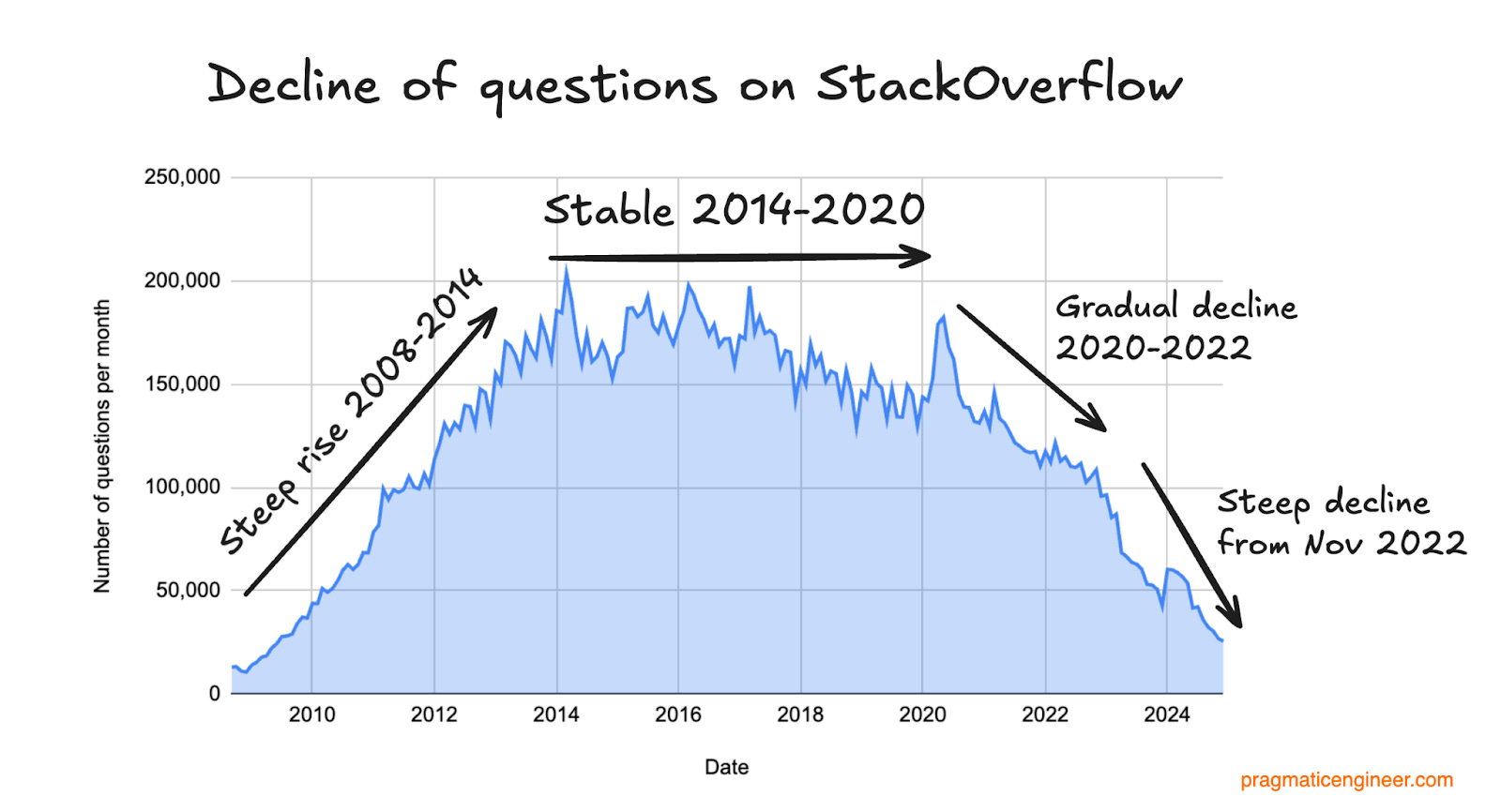

StackOverflow has long been the go-to resource for developers seeking solutions to specific problems. However, each answer is written for the original asker's bug or error, so it often differs from your situation in details such as versions, configuration, or surrounding code, and those discrepancies can render it ineffective for your particular case.

LLMs, on the other hand, provide the specificity we demand. They can address your exact problem with far fewer of these discrepancies, offering solutions tailored to the context and requirements you give them.

2. The Context Limitation

Despite their advantages, LLMs have a significant limitation: they lack global context. Unless you’re using a tool like Cursor (which can index your whole codebase) or explicitly adding every relevant file to the LLM’s context, the model may not fully diagnose and resolve issues that span multiple files.

This becomes particularly challenging when:

- The issue originates in a different file

- Environment variables are involved (which can be sensitive to share)

- The problem requires understanding of the broader system architecture
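As a minimal sketch of the workaround described above, the snippet below bundles several files into one block of text for an LLM's context while hiding the values in environment files. The function names, the `### filename` header format, and the `<redacted>` placeholder are all assumptions made for illustration, not the behavior of any particular tool.

```python
import re

# Assumption: any line shaped like KEY=value (optionally prefixed with
# "export") is treated as an environment assignment.
ENV_LINE = re.compile(r"^(\s*(?:export\s+)?[A-Za-z_][A-Za-z0-9_]*\s*=\s*)(.*)$")


def redact_env(text: str) -> str:
    """Keep variable names but replace every value with a placeholder."""
    return "\n".join(
        ENV_LINE.sub(r"\1<redacted>", line) for line in text.splitlines()
    )


def build_context(files: dict[str, str], env_files: set[str]) -> str:
    """Join file contents into one context block, redacting env files."""
    parts = []
    for name, content in files.items():
        body = redact_env(content) if name in env_files else content
        parts.append(f"### {name}\n{body}")
    return "\n\n".join(parts)
```

Calling `build_context({"app.py": source, ".env": env_text}, env_files={".env"})` would give the model the variable names it needs to reason about configuration without exposing the secrets themselves.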

The Path Forward for StackOverflow

The survival of StackOverflow likely depends on its ability to transform its extensive database of solutions into an LLM-powered platform. It could do so by:

- Gathering all StackOverflow solutions into a single corpus

- Training an LLM on that corpus

- Integrating with existing models like Claude or GPT

This could create the most powerful debugging tool available to engineers, combining:

- StackOverflow’s vast knowledge base

- LLMs’ ability to provide contextual, specific solutions

- The community’s collective expertise
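One way to picture this combination is a retrieval step that finds relevant archived answers and places them next to the developer's own code in a prompt. The sketch below is only an illustration under stated assumptions: it uses retrieval rather than training, the `Answer` record and its fields are hypothetical, word overlap stands in for real search, and no actual model is called.

```python
import re
from dataclasses import dataclass


@dataclass
class Answer:
    """A hypothetical, simplified record for one archived answer."""
    title: str
    body: str
    votes: int


def tokens(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for real text indexing."""
    return set(re.findall(r"[a-z0-9_]+", text.lower()))


def top_answers(query: str, answers: list[Answer], k: int = 2) -> list[Answer]:
    """Rank answers by word overlap with the query, breaking ties by votes."""
    wanted = tokens(query)
    scored = []
    for answer in answers:
        overlap = len(wanted & tokens(answer.title + " " + answer.body))
        if overlap:  # drop answers that share no words with the query
            scored.append((overlap, answer.votes, answer))
    scored.sort(key=lambda item: (item[0], item[1]), reverse=True)
    return [answer for _, _, answer in scored[:k]]


def build_prompt(query: str, code: str, answers: list[Answer]) -> str:
    """Combine the developer's own code with the retrieved answers."""
    refs = "\n".join(f"- {a.title} ({a.votes} votes): {a.body}" for a in answers)
    return f"Problem: {query}\n\nMy code:\n{code}\n\nRelated answers:\n{refs}"
```

The resulting prompt could then be passed to whichever model the platform integrates with. Vote counts act as a rough proxy for the community's collective expertise, while the developer's code supplies the specific context.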

Without such adaptation, StackOverflow risks becoming a relic of the past as developers increasingly turn to LLM-powered solutions for their debugging needs.

Last updated on May 14, 2025 at 12:17 AM UTC+7.